Modeling Natural Language with Transformers: BERT, RoBERTa and XLNet – Cloud Computing For Science and Engineering

![Contextualized Embeddings based Transformer Encoder for Sentence Similarity Modeling in Answer Selection Task | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/3bb54a4663da3ab3b5766c61fb9025348bce2182/3-Figure1-1.png)

Contextualized Embeddings based Transformer Encoder for Sentence Similarity Modeling in Answer Selection Task | Semantic Scholar

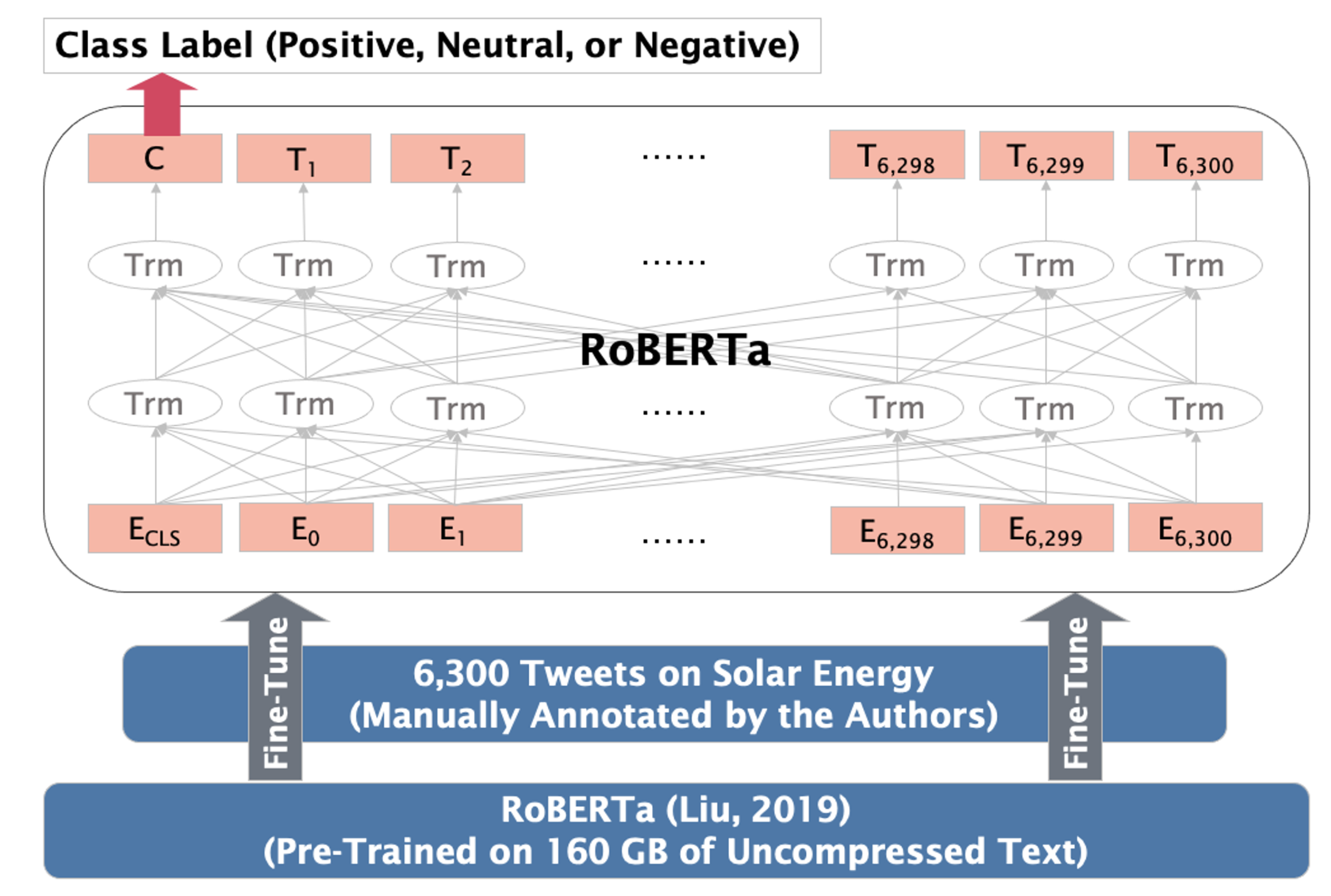

Sustainability | Free Full-Text | Public Sentiment toward Solar Energy—Opinion Mining of Twitter Using a Transformer-Based Language Model | HTML

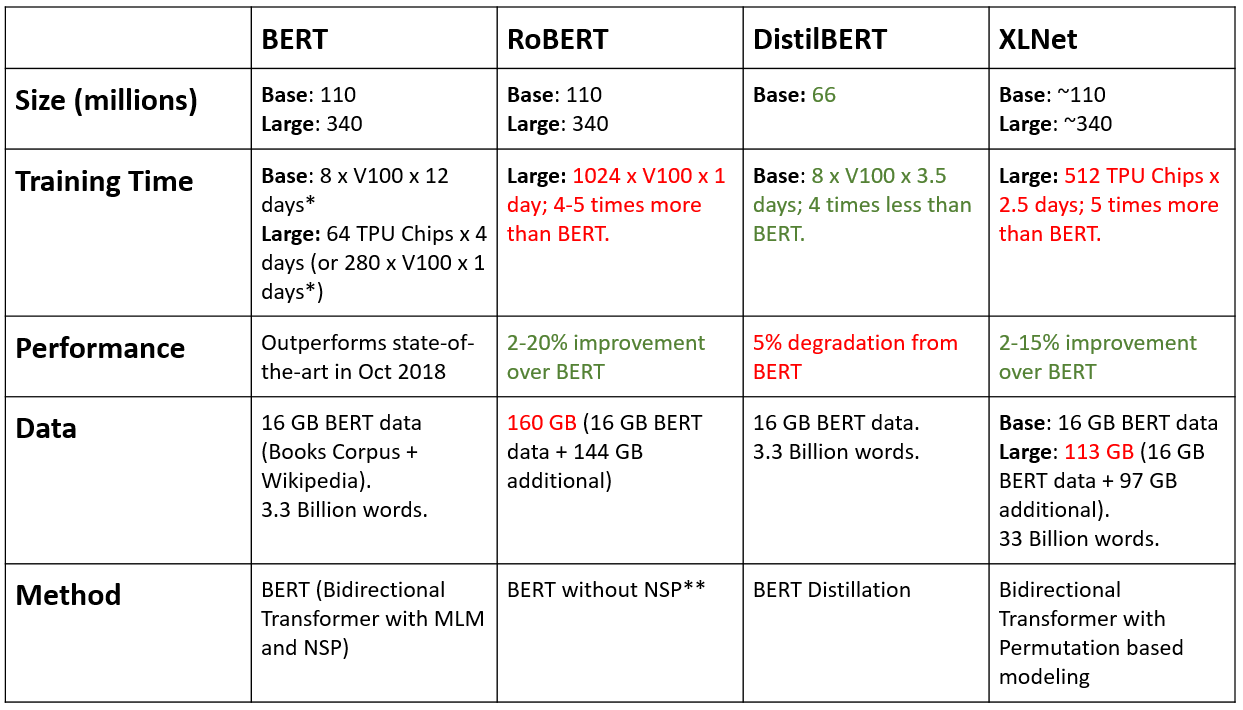

BERT, RoBERTa, DistilBERT, XLNet — which one to use? | by Suleiman Khan, Ph.D. | Towards Data Science

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.) | PythonRepo

Transformers | Fine-tuning RoBERTa with PyTorch | by Peggy Chang | Towards Data Science

SimpleRepresentations: BERT, RoBERTa, XLM, XLNet and DistilBERT Features for Any NLP Task | by Ali Hamdi Ali Fadel | The Startup | Medium

Host Hugging Face transformer models using Amazon SageMaker Serverless Inference | AWS Machine Learning Blog

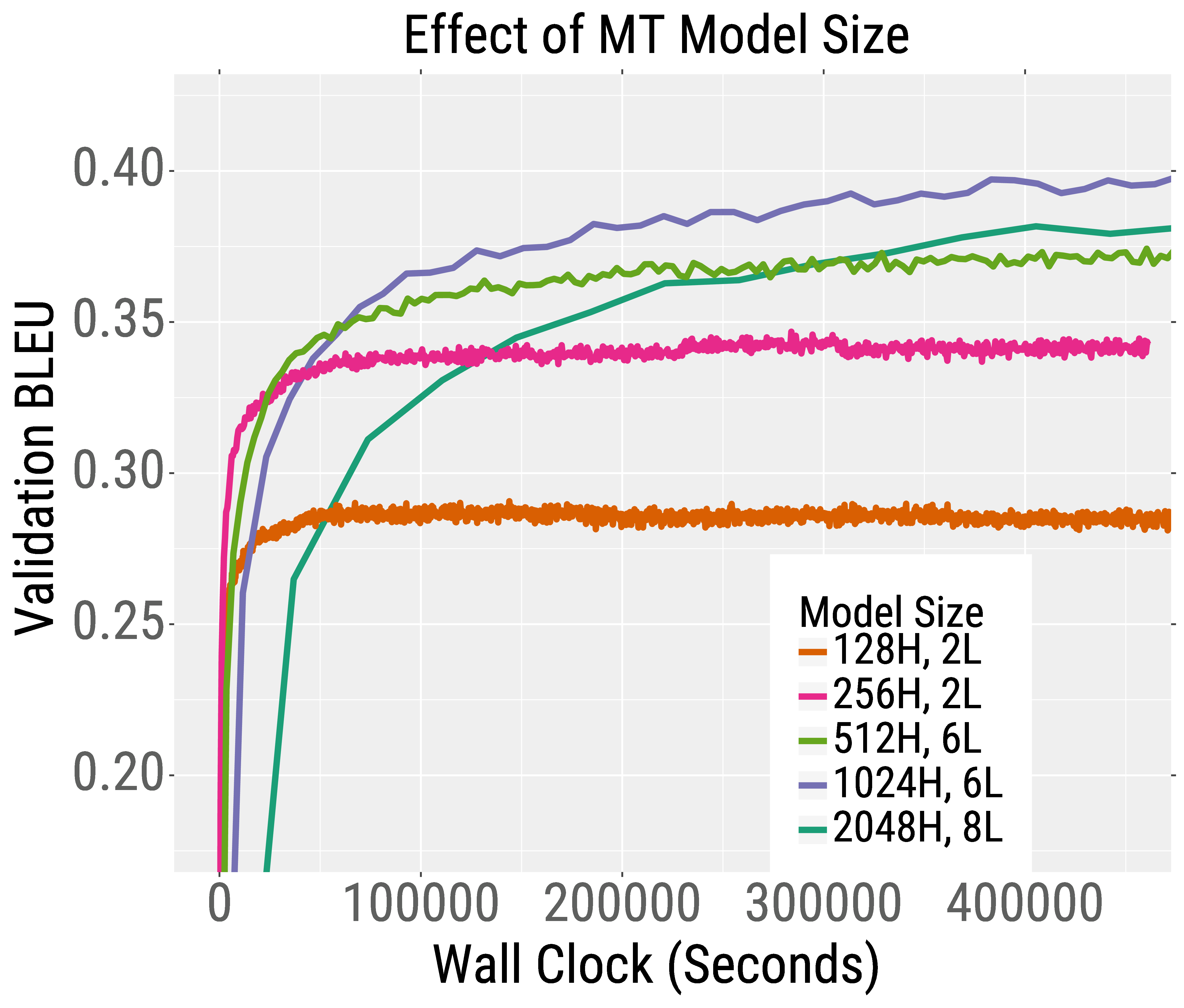

Speeding Up Transformer Training and Inference By Increasing Model Size – The Berkeley Artificial Intelligence Research Blog

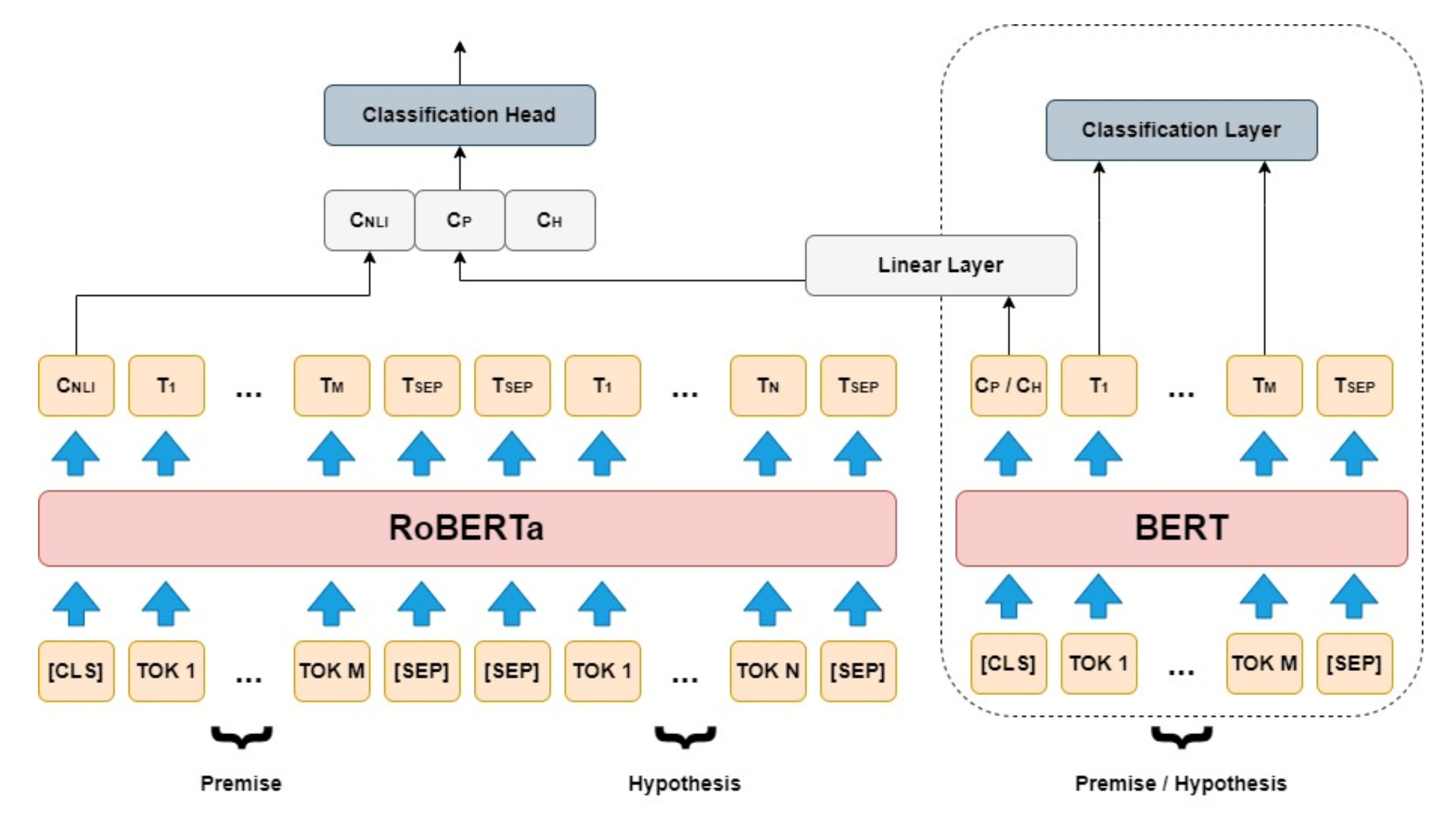

BDCC | Free Full-Text | RoBERTaEns: Deep Bidirectional Encoder Ensemble Model for Fact Verification | HTML

LAMBERT model architecture. Differences with the plain RoBERTa model...